The FAIR extension: A web-browser extension that streamlines FAIR metrics evaluations for use in Researcher Assessment

The scientific community has stepped up its efforts to apply and evaluate the FAIR principles on Digital Objects (DOs) such as publications, datasets, and research software. An increasing number of academics have made their outputs FAIR; however, demonstrating these efforts in academic assessments within the context of Recognition & Rewards remains somewhat abstract. Openly available automated FAIR metrics evaluation tools, such as FAIR enough, the FAIR evaluator, and FAIRsFAIR's F-UJI, have been adopted in the Open Science community. Digital Competence Centers have also been paramount in this process, facilitating activities such as awareness campaigns, training, and support.

In practice, however, using FAIR metrics evaluation tools is still an intricate process for the average researcher. It involves a steep learning curve, because it requires a series of manual steps that demand specific knowledge, which has led some researchers to disengage from the process. Moreover, there is no link between a FAIR evaluation and how researchers can use it in their research assessments. We aim to close this gap with technology and make the process more accessible by bringing FAIR metrics evaluation to researchers' profiles.

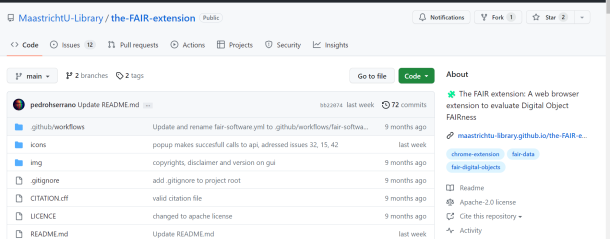

We developed "The FAIR extension", an open-source, user-friendly web browser extension that allows researchers to visualize a FAIR metrics evaluation directly on the web source and export simple reports. Web browser extensions have been an accessible digital tool for libraries supporting scholarship, and they have been shown to serve as a vehicle for Open Access, as in the case of the Lean Library Browser Extension.

The FAIR extension builds on top of the community-accepted FAIR evaluator APIs; it explicitly does not create yet another FAIR metrics evaluation framework from scratch. The objective of the FAIR Digital Objects Framework (FDOF) is for objects published in a digital environment to comply with a set of requirements, such as identifiability and the use of a rich metadata record. The FAIR extension connects via REST-like operations to individual FAIR metrics test endpoints, following Wilkinson et al. (2019), and displays the FAIR metrics on the client side. As a result, users get FAIR scores for articles, datasets, and other DOs in real time on a web source, such as a scholarly platform or a DO repository. Because the evaluation can be exported as a simple report, researchers can use it in individual academic assessments within the context of Recognition & Rewards.
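To make the client-side flow more concrete, the following is a minimal TypeScript sketch of how an extension could query several metric test endpoints for one digital object, turn the answers into a score, and produce a plain-text report. It is not the FAIR extension's actual code. The endpoint URLs, the `{ subject }` request body, the `{ result: "pass" | "fail" }` response shape, and the equal-weight score are all simplifying assumptions; the real FAIR evaluator tests exchange richer JSON-LD payloads. All function and type names are hypothetical.

```typescript
// Simplified, hypothetical result of one FAIR metric test after the
// client has normalised it (not the FAIR evaluator's actual wire format).
interface MetricResult {
  metric: string; // label of the metric test, e.g. "F1"
  passed: boolean; // whether the digital object passed this test
}

// A fetch-like POST function, injected so the network layer can be mocked.
type PostJson = (url: string, body: unknown) => Promise<unknown>;

// Hypothetical mapping from metric labels to metric test endpoint URLs.
type MetricEndpoints = Record<string, string>;

// Assumption: each endpoint answers with JSON of the form { result: "pass" | "fail" }.
function parsePassed(response: unknown): boolean {
  if (typeof response === "object" && response !== null && "result" in response) {
    return (response as { result: unknown }).result === "pass";
  }
  return false;
}

// Sends the object's identifier to every metric test endpoint concurrently.
// A failed request is counted as a failed test instead of aborting the run.
async function evaluateObject(
  subject: string,
  endpoints: MetricEndpoints,
  post: PostJson,
): Promise<MetricResult[]> {
  const entries = Object.entries(endpoints);
  const responses = await Promise.all(
    entries.map(([, url]) => post(url, { subject }).catch(() => null)),
  );
  return entries.map(([metric], i) => ({
    metric,
    passed: parsePassed(responses[i]),
  }));
}

// Equal-weight score (an assumption): the fraction of metric tests that passed, 0 to 1.
function fairScore(results: MetricResult[]): number {
  if (results.length === 0) return 0;
  return results.filter((r) => r.passed).length / results.length;
}

// Plain-text report that a researcher could export and attach to an assessment.
function simpleReport(subject: string, results: MetricResult[]): string {
  const lines = results.map((r) => `${r.metric}: ${r.passed ? "pass" : "fail"}`);
  const percent = Math.round(fairScore(results) * 100);
  return [`FAIR report for ${subject}`, ...lines, `Score: ${percent}%`].join("\n");
}
```

In a browser extension, `post` would typically wrap `fetch` with a JSON `POST` request. Passing it in as a parameter keeps the scoring and reporting logic independent of the network, so it can be tested with a mock, and lets a single unreachable endpoint show up as a failed test rather than breaking the whole evaluation.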
